An issue that caused no end of trouble in our MOSS 2007 extranet environment was FBA users appearing to have been mysteriously deleted from a site collection. This happened with no intervention from the SharePoint site administrators, and at times outside the runs of the profile import synchronisation timer job. That made the cause challenging to investigate and identify.

The first step was to identify what was going on under the covers of SharePoint (i.e. in the content database). On a new occurrence of the issue I identified the user’s FBA login and ran the SQL query below against the UserInfo table of the content database. The UserInfo table holds the SharePoint profiles that have been created in the database. A SharePoint profile is created the first time a user logs on to the site. For this walkthrough I will use ‘d.newton’ as an example FBA login name.

select * from [ContentDatabaseName].[dbo].[UserInfo] where tp_login like '%d.newton%'

The SQL query above returned more than one user with what looked like the same tp_login name and, more importantly, the column tp_IsActive was set to ‘0’ for all the ‘d.newton’ logins. This effectively means that the user profile for ‘d.newton’ is inactive in SharePoint and no access is granted to the site collection. In addition, the column tp_deleted was populated with the same value as the user’s profile ID. The duplicated tp_login names appeared identical in the query results, but after copying them from SQL into Notepad I found that the duplicates had whitespace at the end. An example is below:

tp_id = 551, tp_login = 'membershipprovider:d.newton'

tp_id = 552, tp_login = 'membershipprovider:d.newton ' (single trailing space)

tp_id = 553, tp_login = 'membershipprovider:d.newton  ' (two trailing spaces)
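Trailing spaces are easy to miss in a results grid, so a query that compares stored and trimmed lengths can surface them directly. The following is a minimal read-only sketch rather than the query I actually ran. It assumes tp_login is stored as nvarchar (two bytes per character), and [ContentDatabaseName] is the same placeholder as in the earlier query.

```sql
-- Read-only check: list logins that end in one or more trailing spaces.
-- Assumption: tp_login is nvarchar, so DATALENGTH / 2 gives the stored character count.
SELECT tp_id,
       tp_login,
       tp_IsActive,
       tp_deleted,
       LEN(tp_login)            AS TrimmedLength, -- LEN ignores trailing spaces
       DATALENGTH(tp_login) / 2 AS StoredLength   -- DATALENGTH counts them
FROM [ContentDatabaseName].[dbo].[UserInfo]
WHERE DATALENGTH(tp_login) > DATALENGTH(RTRIM(tp_login))
ORDER BY tp_login, tp_id;
```

For the example rows, 552 and 553 would be returned and 551 would not, because its stored and trimmed lengths match.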

So how, when and why are the duplicated profiles created? After running a number of custom reports I found that the duplicated profiles were being created by the SharePoint system account. Further testing against the custom extranet login.aspx showed that the login page would accept the username ‘d.newton’ with whitespace at the end (note: the password must still be the original user’s password).

So what happens in the UserInfo table when you log in with the trailing whitespace? A new profile is created with the same tp_login name plus the whitespace and a new profile ID, but with no access to the relevant SharePoint site. SharePoint detects a conflict with the existing tp_login name, which is required to be distinct, and resolves it by making the original profile inactive, setting its tp_IsActive column to ‘0’. From the user’s point of view, the relevant site is simply no longer visible.

Armed with this new information, we now know that profile 551, with the username ‘d.newton’ and no whitespace, is the correct profile, and we can make this user active again from the site permissions of the relevant site.

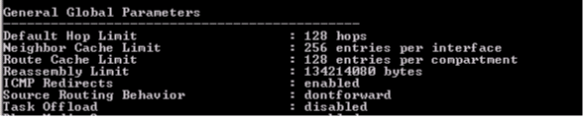
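To see how many users are affected, the profiles can be grouped by their trimmed login name. This is a sketch under assumptions: the tp_SiteID column is used to keep site collections separate and is not mentioned elsewhere in this post, and treating the lowest profile ID as the original simply mirrors the d.newton example rather than being a guaranteed rule.

```sql
-- Read-only report: logins that map to more than one profile once trailing spaces are removed.
-- Assumption: tp_SiteID identifies the site collection each profile belongs to.
SELECT tp_SiteID,
       RTRIM(tp_login) AS TrimmedLogin,
       COUNT(*)        AS ProfileCount,
       MIN(tp_id)      AS LowestProfileId -- likely the original profile, as with 551 above
FROM [ContentDatabaseName].[dbo].[UserInfo]
GROUP BY tp_SiteID, RTRIM(tp_login)
HAVING COUNT(*) > 1
ORDER BY TrimmedLogin;
```

Each row is a candidate for review rather than proof of the issue, since the stored tp_login values still need checking for trailing whitespace.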

It is also useful to know that we can now replicate the issue, which is good news! However, we still need a resolution to stop it from recurring. The first task I would recommend is to change the extranet’s forms loginUrl to the out-of-the-box (OOTB) Microsoft login.aspx and re-test. It is very likely that Microsoft’s default login.aspx page will not show the same behaviour, because it trims whitespace from the end of the username, which means that a username conflict or duplication never occurs. The steps to change the forms login page back to the OOTB login.aspx are detailed below:

1. On your web front end server, open the web.config of your extranet site and navigate to the ‘authentication mode’ section.

2. Change the forms loginUrl to “/_layouts/login.aspx” (details below)

<authentication mode="Forms">

<forms loginUrl="/_layouts/login.aspx" />

</authentication>

In our environment it turned out to be the custom login.aspx page that was causing the issue. Our custom login page was referencing code that did not trim the username correctly, which allowed duplicate profiles to be created in the UserInfo table. What is annoying is that the UserInfo table accepts trailing whitespace in the tp_login column! If you encounter the same issue, I would recommend either fixing your code or creating a new login.aspx page based on the Microsoft OOTB login.aspx.

Removing the Duplicated Users

As we now had a number of duplicated SharePoint profiles in the UserInfo table, it would have been useful if the profile synchronisation timer job cleaned up the duplicates or inactive users. However, I have been advised by Microsoft that the profile synchronisation timer job will only delete inactive Active Directory users and not inactive FBA users. The only supported way to remove an FBA user from the UserInfo table is via PowerShell, and the article by Niklas Goude explains it very well.